See How AI Stereotypes You

Computers think they know who you are. Artificial intelligence algorithms can recognize objects in images, even faces. But we rarely get to look under the hood of facial recognition algorithms. Now, thanks to ImageNet Roulette, we can watch an AI jump to conclusions. Some of its guesses are ridiculous, others are... racist.

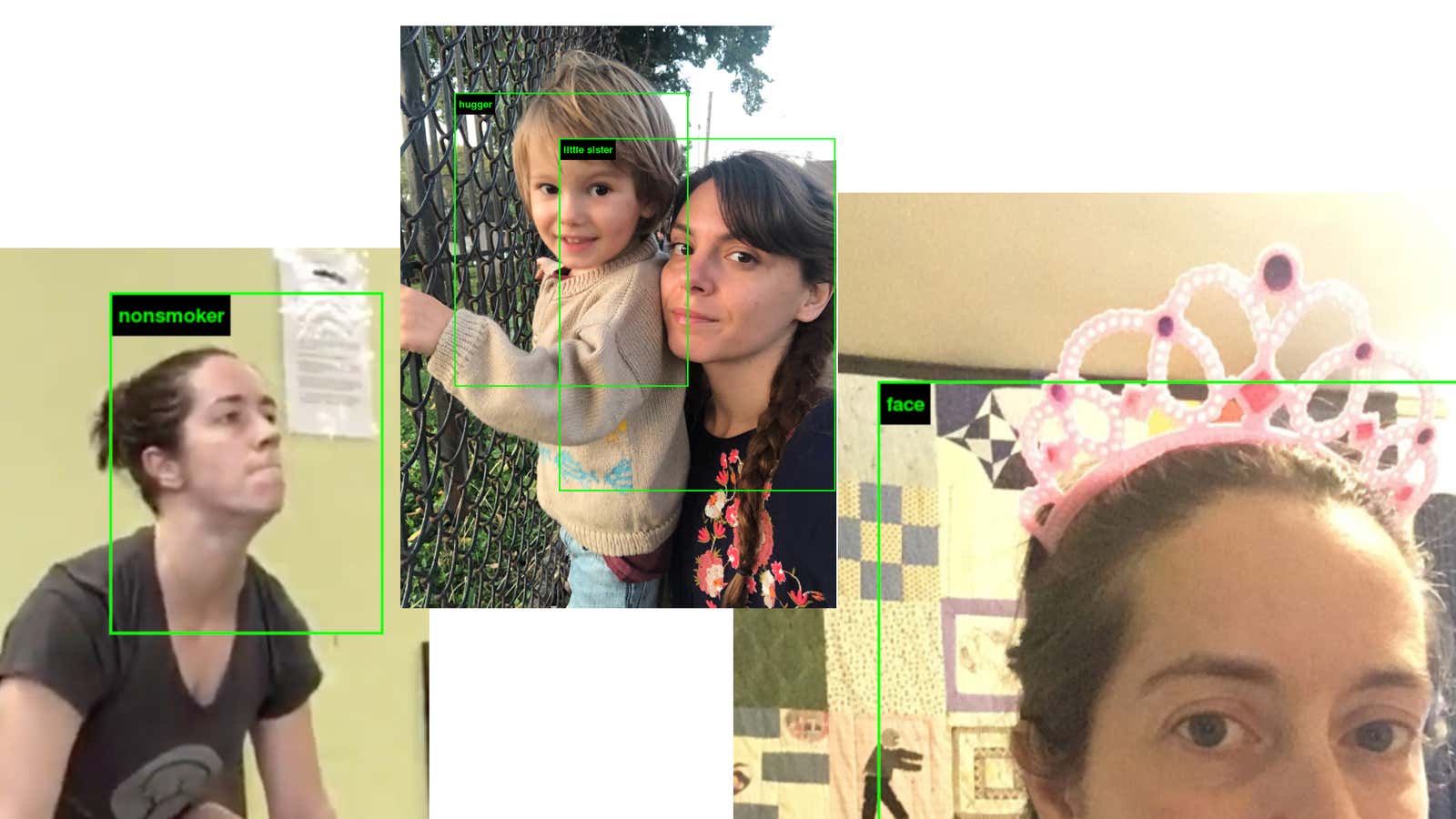

ImageNet Roulette was designed as part of an art and technology museum exhibition called Training Humans, to show us the messy insides of facial recognition algorithms that we might otherwise assume are simple and unbiased. It uses data from one of the large, standard databases used in artificial intelligence research. Upload a photo and the algorithm will show you what it thinks of you. My first selfie was labeled “non-smoker.” Another was simply labeled “face.” Our editor-in-chief was called a “psycholinguist.” Our social media editor was tagged “swot, grind, nerd, wonk, dweeb.” Harmless entertainment, right?

But then I tried a photo of myself in darker lighting, and it came back with the label “Black, Black Man, Blackamoor, Negro, Negroid.” This actually appears to be the AI's label for anyone with dark skin. It gets worse: in Twitter threads discussing the tool, people of color consistently receive this tag, along with others like “mulatto” and some decidedly nasty labels like “orphan” and “rape suspect.”

These categories come from the original ImageNet/WordNet database; they were not added by the creators of the ImageNet Roulette tool. Here’s a note from the tool's creators:

ImageNet Roulette regularly classifies people in dubious and cruel ways. This is because the underlying training data contains these categories (and images of people who have been tagged with those categories). We did not create the underlying training data responsible for these classifications. We imported the categories and training images from the popular ImageNet dataset, which was created at Princeton and Stanford Universities and is a standard benchmark used for image classification and object detection.

ImageNet Roulette is, in part, intended to demonstrate how various kinds of politics propagate through technical systems, often without the creators of those systems even being aware of them.

Where do these labels come from?

The tool is based on ImageNet, an image and label database that has been and remains one of the largest and most readily available sources of training data for image recognition algorithms. According to Quartz, it was compiled from images collected online and tagged mostly by Mechanical Turk workers – people who categorized the images en masse for pennies.

Since the creators of ImageNet do not own the photographs they collected, they cannot simply give them away. But if you’re interested, you can browse the labels and get a list of URLs that were the original sources of the photos. For example, “person, individual, someone, somebody, mortal, soul” > “scientist” > “linguist, linguistic scientist” > “psycholinguist” leads to this list of photographs, many of which appear to come from university faculty websites.
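If you want a feel for how that chain of labels hangs together, here is a minimal sketch. It assumes a tiny hand-written parent map (the `PARENT` dictionary and the `label_path` helper are made up for illustration and are not part of ImageNet or WordNet tooling); the real hierarchy is vastly larger.

```python
# Toy parent map covering only the chain mentioned in the article.
# This is a hypothetical stand-in for the real WordNet hierarchy.
PARENT = {
    "psycholinguist": "linguist, linguistic scientist",
    "linguist, linguistic scientist": "scientist",
    "scientist": "person, individual, someone, somebody, mortal, soul",
}


def label_path(label, parent=PARENT):
    """Walk from a label up to its root and return the path root-first."""
    path = [label]
    # Keep climbing while the current label has a known parent.
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return list(reversed(path))


if __name__ == "__main__":
    print(" > ".join(label_path("psycholinguist")))
```

Every specific label sits at the bottom of a path like this one, which is why one photo tag can be traced back up to the generic "person" category.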

Viewing these images gives us an idea of what happened here. The psycholinguists tend to be white people photographed in that faculty-headshot way. If your photo looks like theirs, you may be tagged as a psycholinguist. Likewise, the other tags depend on how similar you are to the training images with those tags. If you are bald, you may be flagged as a skinhead. If you have dark skin and fashionable clothes, you may be tagged as wearing African ceremonial attire.
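To make the "you get the tag of whatever you resemble" idea concrete, here is a rough sketch. It swaps in a plain nearest-neighbor lookup, which is much simpler than the neural network a real image classifier would use, and the feature numbers in `TRAINING` are invented purely for illustration; only the two label names come from the article.

```python
import math

# Invented two-number "features" standing in for whatever a real model
# extracts from a photo. These values are illustrative, not real data.
TRAINING = [
    ((0.9, 0.8), "psycholinguist"),
    ((0.8, 0.9), "psycholinguist"),
    ((0.2, 0.3), "non-smoker"),
    ((0.3, 0.1), "non-smoker"),
]


def nearest_label(features, training=TRAINING):
    """Return the label of the training example closest to `features`."""
    # math.dist computes the Euclidean distance between two points.
    _, label = min(training, key=lambda example: math.dist(features, example[0]))
    return label


if __name__ == "__main__":
    print(nearest_label((0.85, 0.85)))
    print(nearest_label((0.25, 0.20)))
```

The lookup has no idea who you are. It only knows which labeled examples you land closest to, so whatever skew exists in those examples comes straight back out as your label.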

ImageNet is by no means an impartial, objective algorithm. It reflects the bias in the images its creators collected, in the society that produced those images, in the minds of the mTurk workers, and in the dictionaries that supplied the words for the labels. Some person or computer put “blackamoor” into an online dictionary a long time ago, but since then, plenty of people must have seen “blackamoor” in their AI tags (in fucking 2019!) and didn’t say, “Wow, let’s delete that.” Yes, algorithms can be racist and sexist, because they learned it by watching us, okay? They learned it by watching us.